Rendering for Robots (Synthetic AI training data with Blender for computer vision)

By Richard

We've been 3D rendering for all sorts of applications. The most common one is 3D animation, with some CGI or VFX work on the side, but it's all pretty similar: images created for humans to view.

However, recently we've been using Blender in a very different way. We've been busy rendering synthetic training data for computer vision algorithms (artificial intelligence). This means the rendered images are not meant for humans, but for computers 🤖

In this article I want to cover this rather different way of using Blender. It may sound complicated but I'll try to keep it simple, and keep the AI concepts to a minimum.

For any 3D artists out there who are afraid AI will take over the art world (and maybe even their job): this could be a way to join the revolution :)

Deep Learning

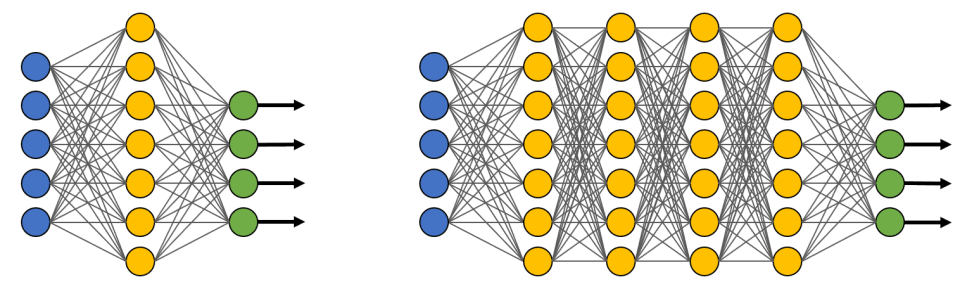

Let's start with the concept of neural networks and "deep learning" that transformed AI in the last decade. Neural networks had been used long before this, and they were able to solve problems in certain contexts. But they weren't adopted at the scale we see now, because our hardware first had to catch up with the intense amounts of compute power they demand.

It turns out we can solve a lot of hard pattern recognition problems with huge neural networks. If it doesn't work, you can often just keep throwing bigger/deeper neural networks at it until it does. The "deeper" the network, the more complex the patterns it can learn.

Once the compute power was available to actually do this, things started to take off.

Neural networks are being used for many different applications now: voice recognition, speech generation, language translation, and image recognition, to name a few. We'll focus on image recognition, as that's where Blender can be useful.

Although deep learning works surprisingly well, one of its weaknesses is that it requires a LOT of data to train on. As a general rule, the bigger/deeper the neural network, the more data it needs to "learn" and do something useful.

Training Data

What is a lot of data? Think on the order of hundreds of thousands of training examples (this can vary a lot depending on the type of network you're training). In the case of computer vision, a "training example" is usually just an image, combined with some sort of "label" to tell the AI what to associate the image with.

Getting your hands on huge amounts of training data can get very expensive and time-consuming. You basically need a lot of pictures/photos that have been labelled (by humans). These labels are required to give the AI model something to learn from.

A label could simply be: "this is an image of a cat", but it can also be another image for some more fancy AI models.

If real world labelled photos are expensive to get your hands on, is there maybe another way we can generate (photo-realistic) images?

Blender 3D

You guessed it, Blender is more than capable of rendering photo-realistic images with Cycles:

(credits at the end of the article)

And, as a bonus, most things in Blender can be automated. This allows us to generate huge amounts of images very efficiently.

Second bonus: Creating the labels is usually a lot easier with rendered images than with real photos. You know what you are rendering, so providing a text based label is pretty trivial.
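To make the "labels come for free" point a bit more concrete, here is a minimal sketch of a label file written alongside the renders. The file names, the label values and the `write_label_manifest` helper are all made up for illustration; a real training pipeline may expect a different format.

```python
import csv
import io


def write_label_manifest(rows, out):
    """Write (filename, label) pairs as CSV to a file-like object."""
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["filename", "label"])
    writer.writerows(rows)


# Hypothetical renders: we already know what each one shows,
# because we decided what to put in the scene before rendering it.
renders = [
    ("donut_0001.png", "donut"),
    ("donut_0002.png", "donut"),
    ("bagel_0001.png", "not_donut"),
]

# An in-memory buffer keeps the example self-contained;
# pass an open file object instead to write the manifest to disk.
buffer = io.StringIO()
write_label_manifest(renders, buffer)
manifest_text = buffer.getvalue()
print(manifest_text)
```

No human has to look at a single image here: the label is simply recorded at the moment the render is set up.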

If the label is not text based but something else, like another image, it can also be easily created with Blender. For example, a model that's trained to generate depth maps needs the actual depth map of each image as its example. This is trivial to generate with the Z-depth render pass in Blender.
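As a rough sketch of what switching on that depth pass could look like from a script: the `configure_depth_output` helper and the output path below are my own illustration, not a fixed recipe. It only sets attributes, so it can be dry-run outside Blender with stand-in objects, which is what the example does. Inside Blender you would pass the real scene and view layer instead.

```python
from types import SimpleNamespace


def configure_depth_output(scene, view_layer, filepath):
    """Enable the Z (depth) pass and save renders as multilayer EXR."""
    # The depth pass is switched on per view layer.
    view_layer.use_pass_z = True
    # Multilayer EXR keeps every enabled pass as float data in one file.
    scene.render.image_settings.file_format = "OPEN_EXR_MULTILAYER"
    scene.render.filepath = filepath


# Dry run outside Blender, using stand-ins that mimic the attribute layout.
# Inside Blender the equivalent would be (assumption: run from a script):
#   import bpy
#   configure_depth_output(bpy.context.scene, bpy.context.view_layer,
#                          "//renders/donut_0001")
#   bpy.ops.render.render(write_still=True)
scene = SimpleNamespace(
    render=SimpleNamespace(
        image_settings=SimpleNamespace(file_format="PNG"),
        filepath="",
    )
)
view_layer = SimpleNamespace(use_pass_z=False)

configure_depth_output(scene, view_layer, "//renders/donut_0001")
print(view_layer.use_pass_z, scene.render.image_settings.file_format)
```

The rendered image and its depth "label" then come out of the same render, perfectly aligned pixel for pixel.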

Creating many variations

Blender is perfect for creating a lot of variations.

For example: If you want to train an AI to recognise images of donuts, you need many images of all sorts of different donuts.

(you'd also need images of other stuff to learn what's not a donut, but we'll focus on donuts for now)

So if you have a bunch of different looking donuts, some different backgrounds, a few lighting setups, and various camera angles, you can generate thousands of photorealistic images of donuts. All different because you can create unique combinations of the donut, background, etc.
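To show how quickly these combinations add up, here is a small sketch in plain Python. The donut names, backgrounds, lighting setups and camera angles are placeholders, and `build_render_jobs` is a hypothetical helper. Applying each job to an actual Blender scene and rendering it is left out.

```python
import itertools

# Placeholder asset lists; in practice these would refer to objects,
# HDRIs, light rigs and camera positions in your .blend file.
donuts = ["glazed", "chocolate", "sprinkles"]
backgrounds = ["kitchen", "cafe", "studio"]
lighting = ["daylight", "warm_lamp"]
camera_angles = [0, 45, 90, 135]


def build_render_jobs(donuts, backgrounds, lighting, camera_angles):
    """Return one job description per unique combination of inputs."""
    combos = itertools.product(donuts, backgrounds, lighting, camera_angles)
    jobs = []
    for index, (donut, background, light, angle) in enumerate(combos):
        jobs.append(
            {
                "filename": f"donut_{index:05d}.png",
                "donut": donut,
                "background": background,
                "lighting": light,
                "camera_angle": angle,
                "label": "donut",
            }
        )
    return jobs


jobs = build_render_jobs(donuts, backgrounds, lighting, camera_angles)
print(len(jobs))  # 3 * 3 * 2 * 4 = 72 unique images
```

Even these tiny lists give 72 images. With 20 donuts, 20 backgrounds, 5 lighting setups and 10 camera angles you would already be at 20,000.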

Render Farm

Once you can generate thousands of images with the push of a button, all that's left is the rendering. Rendering can also get quite time-consuming, especially if it needs to be high quality and photorealistic. But that's what Blendergrid is good at.

Blendergrid's purpose is to speed up 3D rendering. We take large-scale render jobs and run them on our render farm: servers running Blender in data centers all over the world, so the job gets done fast.

(If it wasn't for the heavy rendering this requires, I probably wouldn't have been writing about it here.)

Are you a 3D artist?

If you are a Blender artist looking to provide value and solve problems working with Blender professionally, this might be an interesting angle to look into.

You don't need to become an expert in artificial intelligence to do something useful here. If you know what a certain AI application needs, you can start creating this by being creative in Blender.

What it usually comes down to is being able to generate many different examples of a certain "thing" or "concept" that the AI model can learn from.

Are you a data scientist or AI developer?

If you are developing computer vision models, you can make the acquisition of high-quality training data a lot more efficient with Blender.

If you don't know how to use Blender but you are interested in exploring the use of synthetic training data, feel free to reach out to us. We have a large network of 3D artists that we can connect you with to start creating AI synthetic training data.

Credits

Credits to the following blenderartists.org projects: